|

I am currently a second-year Ph.D. student in the School of Computer Science and Engineering at the Georgia Institute of Technology. I am very fortunate to be advised by Kai Wang. My research interests lie primarily in machine learning, optimization, and AI for social impact. Currently, I mainly study topics related to bilevel optimization and decision-focused learning. Email / CV / Google Scholar / GitHub / Blogs |

|

|

|

|

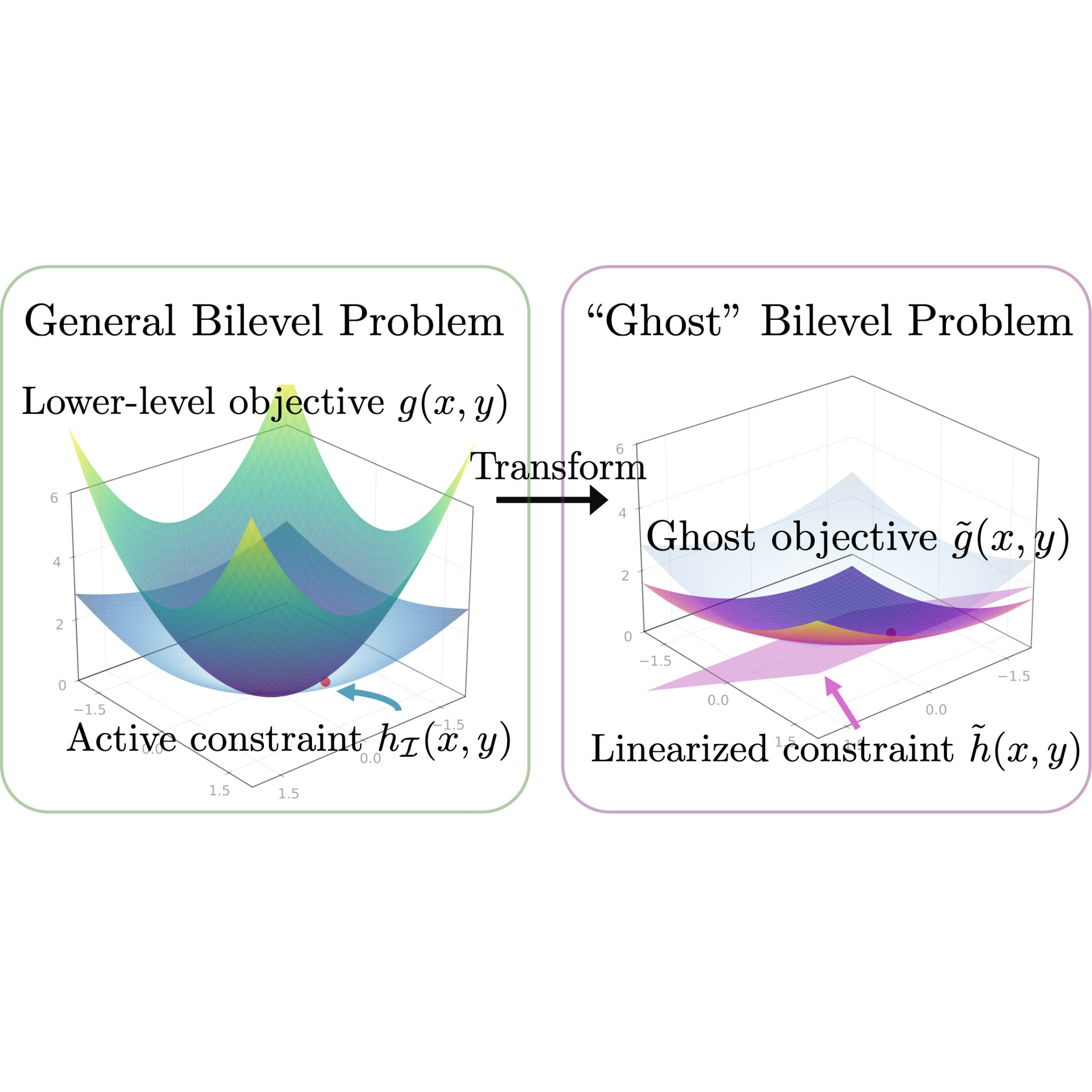

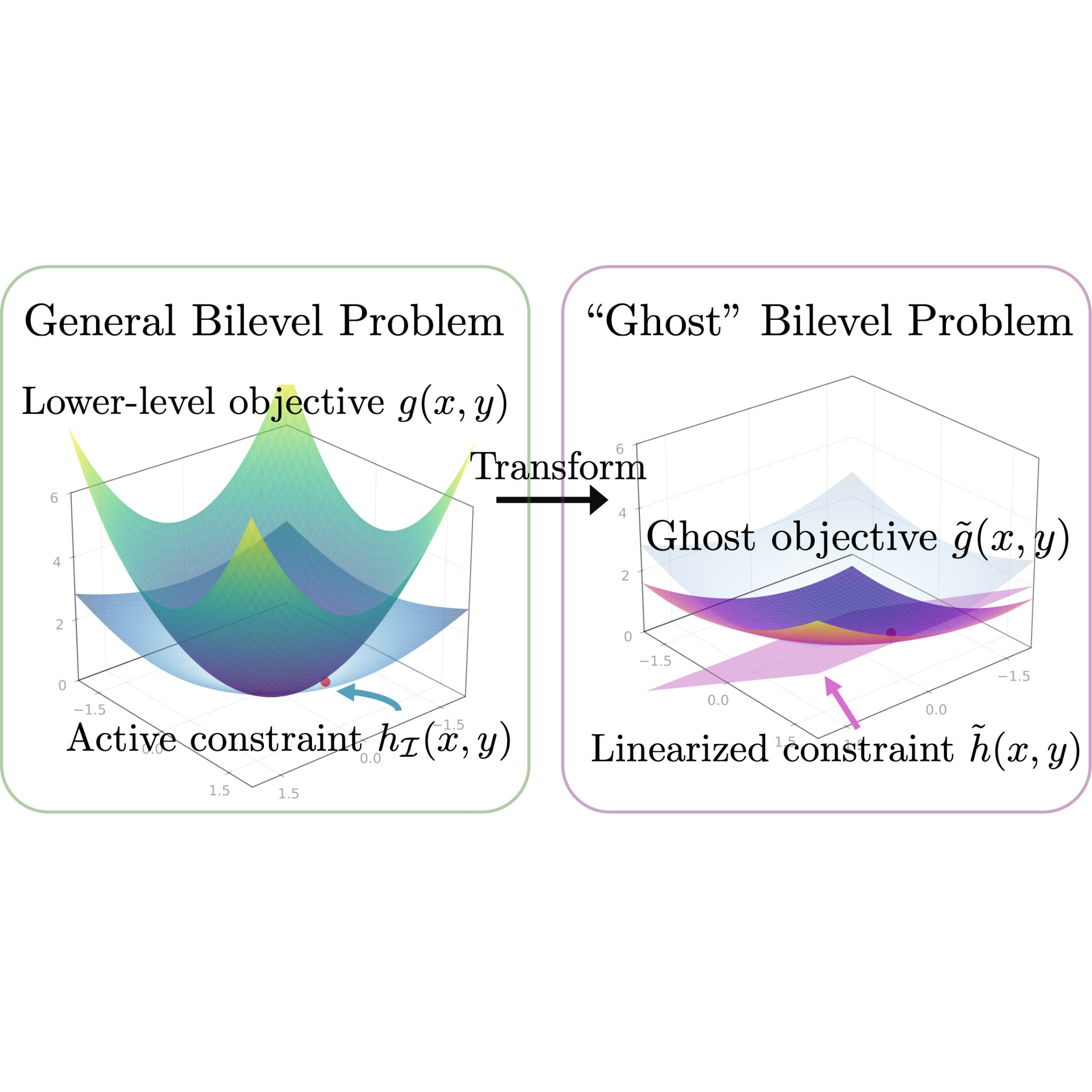

Zihao Zhao*, Kai-Chia Mo*, Shing-Hei Ho, Brandon Amos, Kai Wang. arXiv preprint. We introduce an active-set Lagrangian hypergradient oracle that avoids Hessian evaluations and provides finite-time, non-asymptotic approximation guarantees. We show that an approximate hypergradient can be computed using only first-order information in $\tilde{\mathcal{O}}(1)$ time, leading to an overall complexity of $\tilde{\mathcal{O}}(\delta^{-1}\epsilon^{-3})$ for constrained bilevel optimization, which matches the best known rate for non-smooth non-convex optimization. |

|

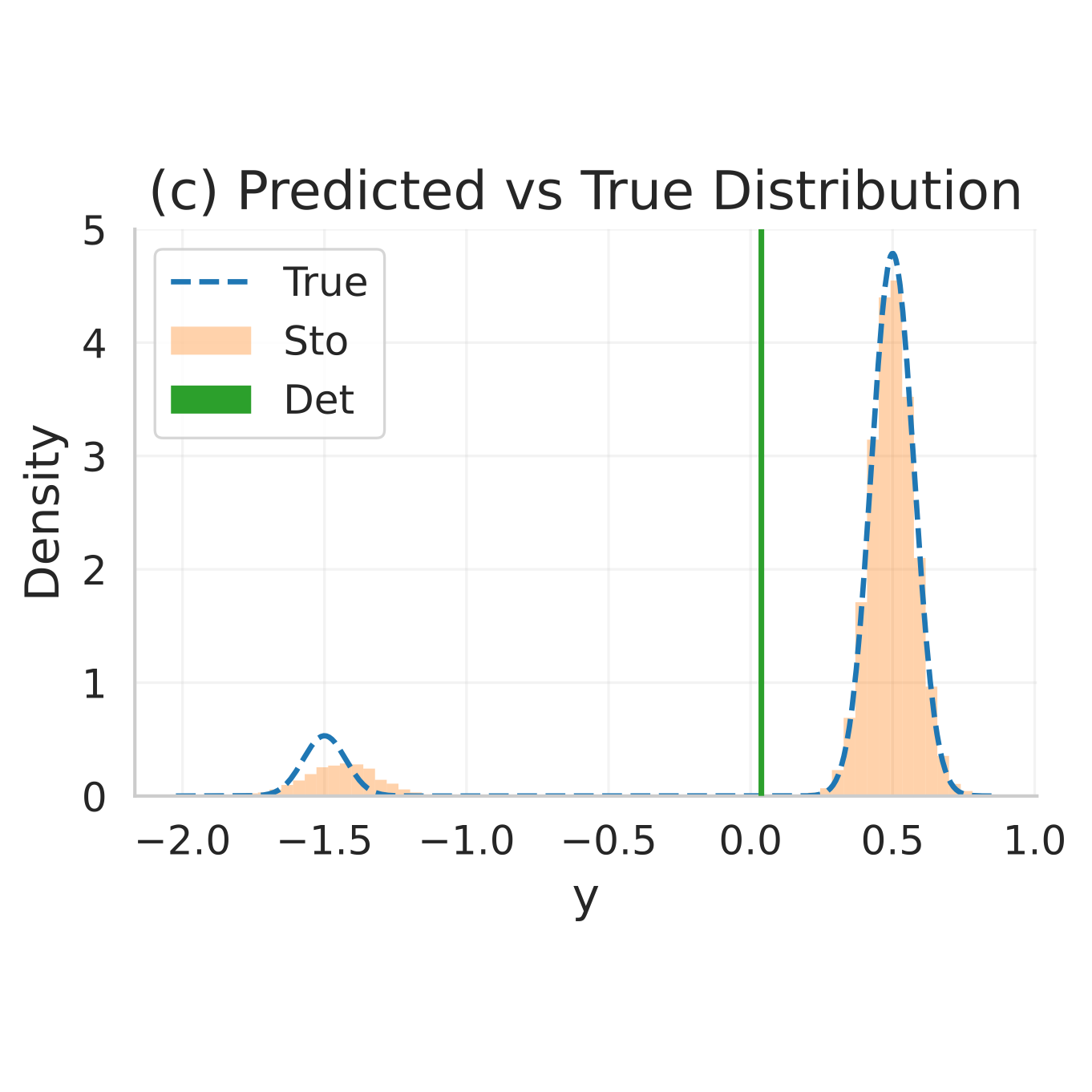

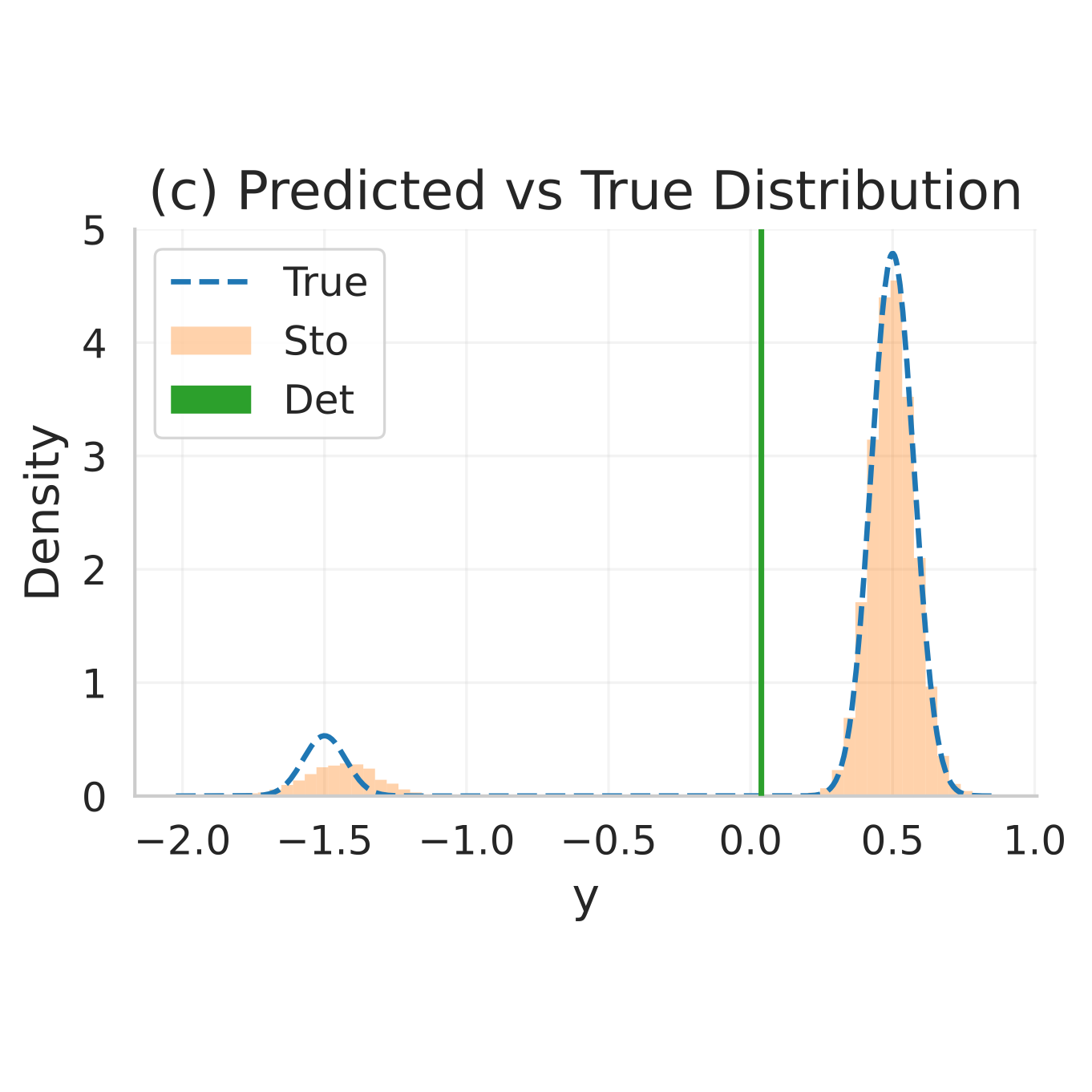

Zihao Zhao, Christopher Yeh, Lingkai Kong, Kai Wang. International Conference on Learning Representations (ICLR), 2026. We propose the first diffusion-based decision-focused learning (DFL) approach for stochastic optimization, which trains a diffusion model to capture complex uncertainty in problem parameters. We develop two end-to-end training techniques to integrate the diffusion model into decision-making: reparameterization and score function approximation. |

|

|

|

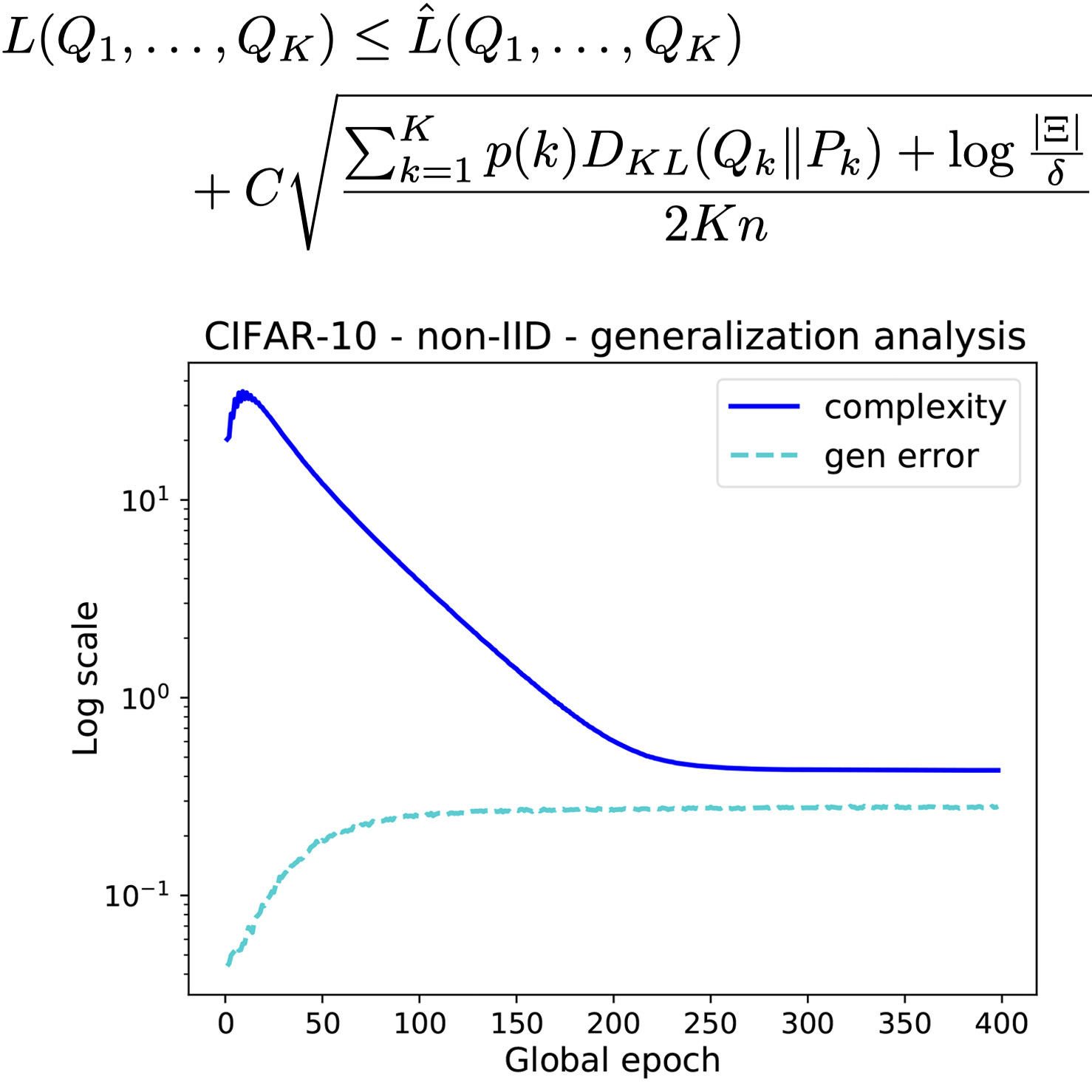

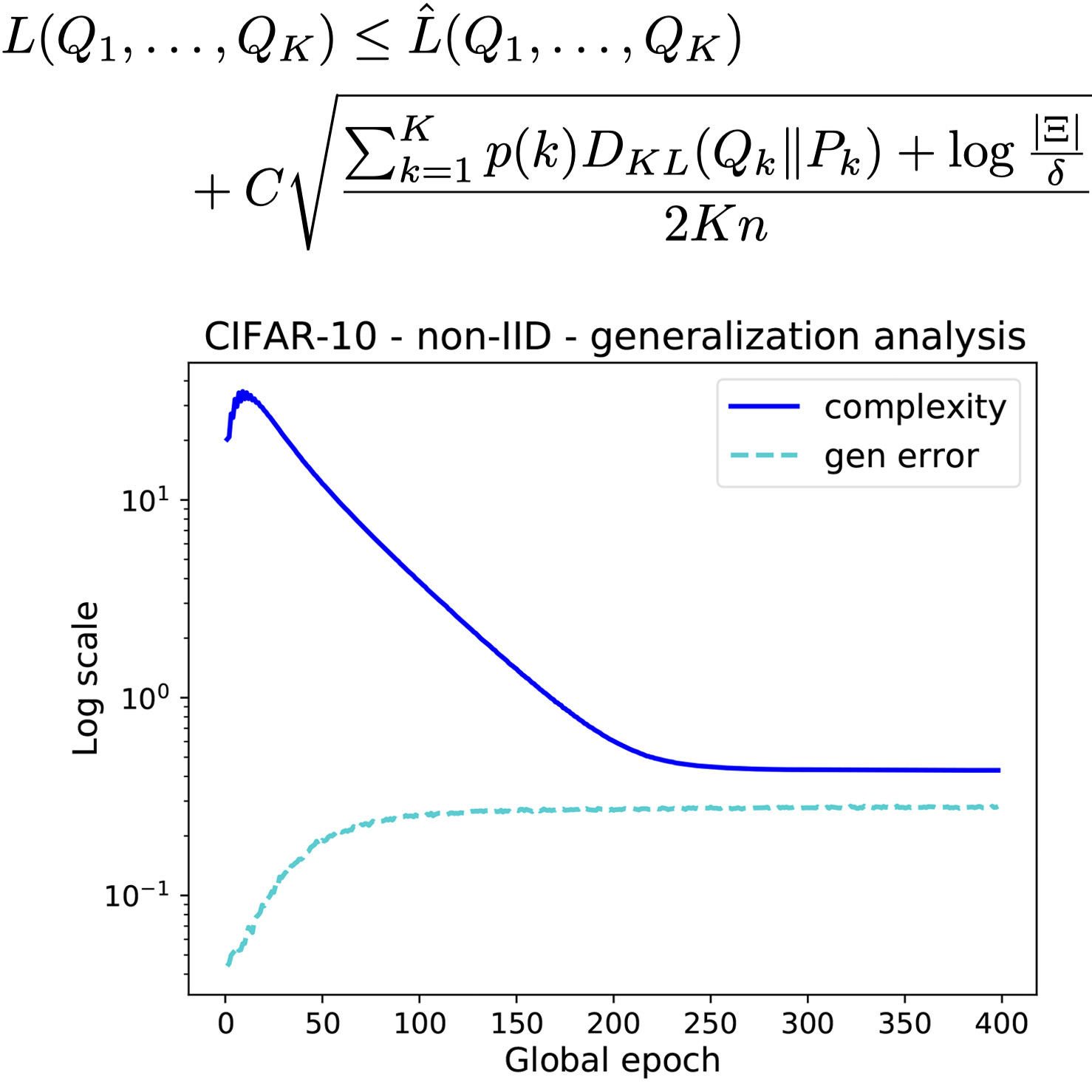

Zihao Zhao, Yang Liu, Wenbo Ding, Xiao-Ping Zhang. Accepted by IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2024. We introduce a non-vacuous federated PAC-Bayesian generalization error bound tailored for non-IID local data and present a Gibbs-based algorithm for its optimization. The tightness of the bound is validated on real-world datasets. |

|

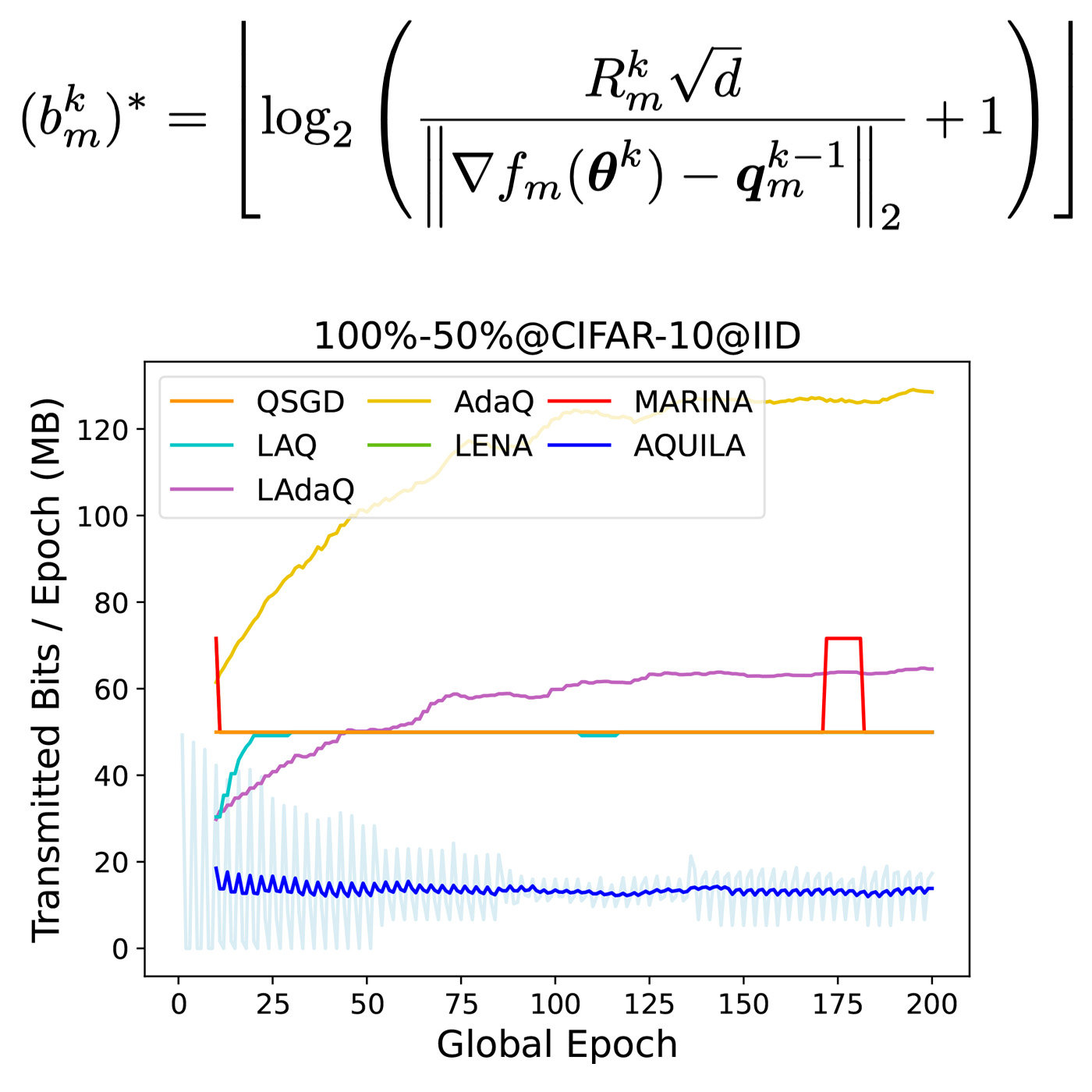

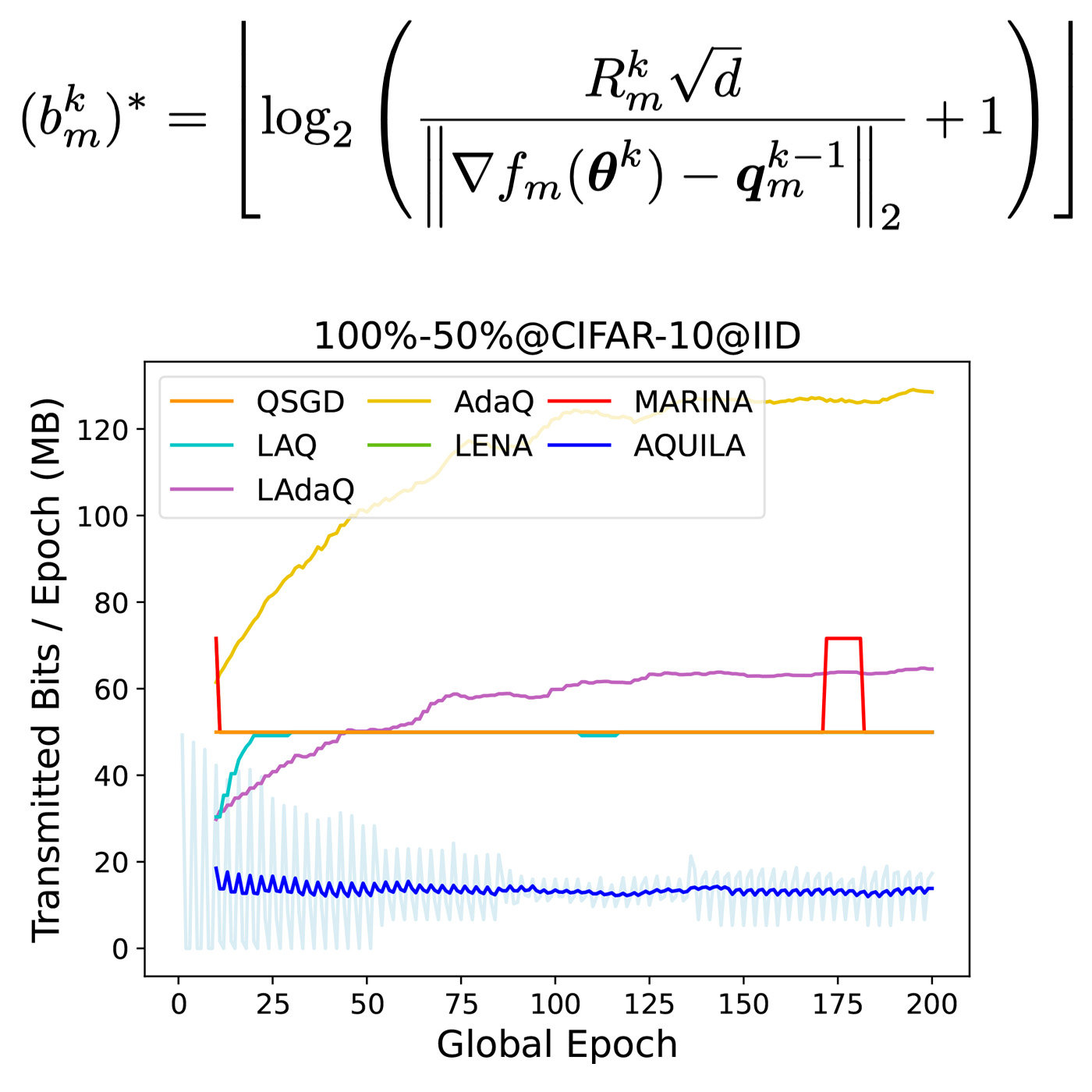

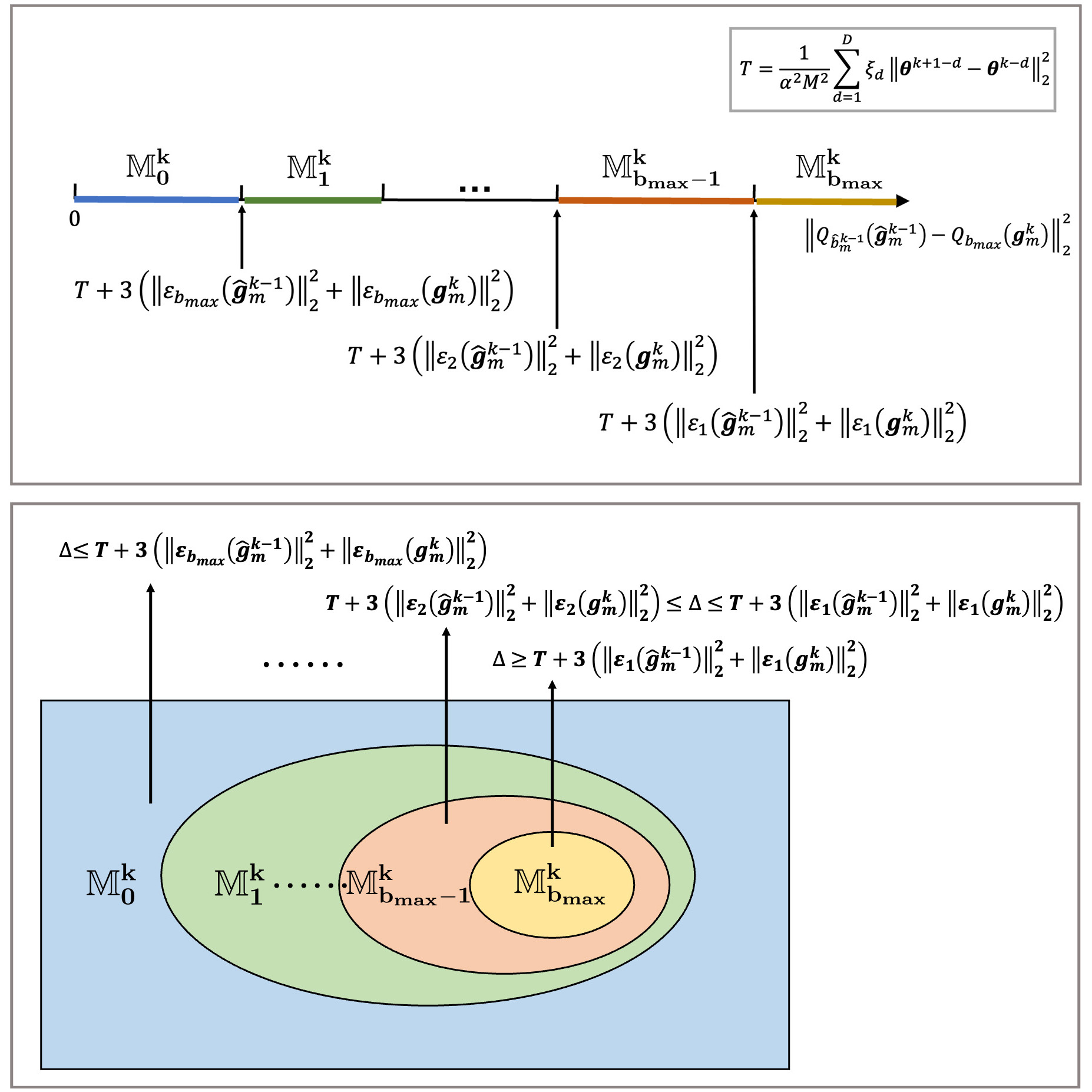

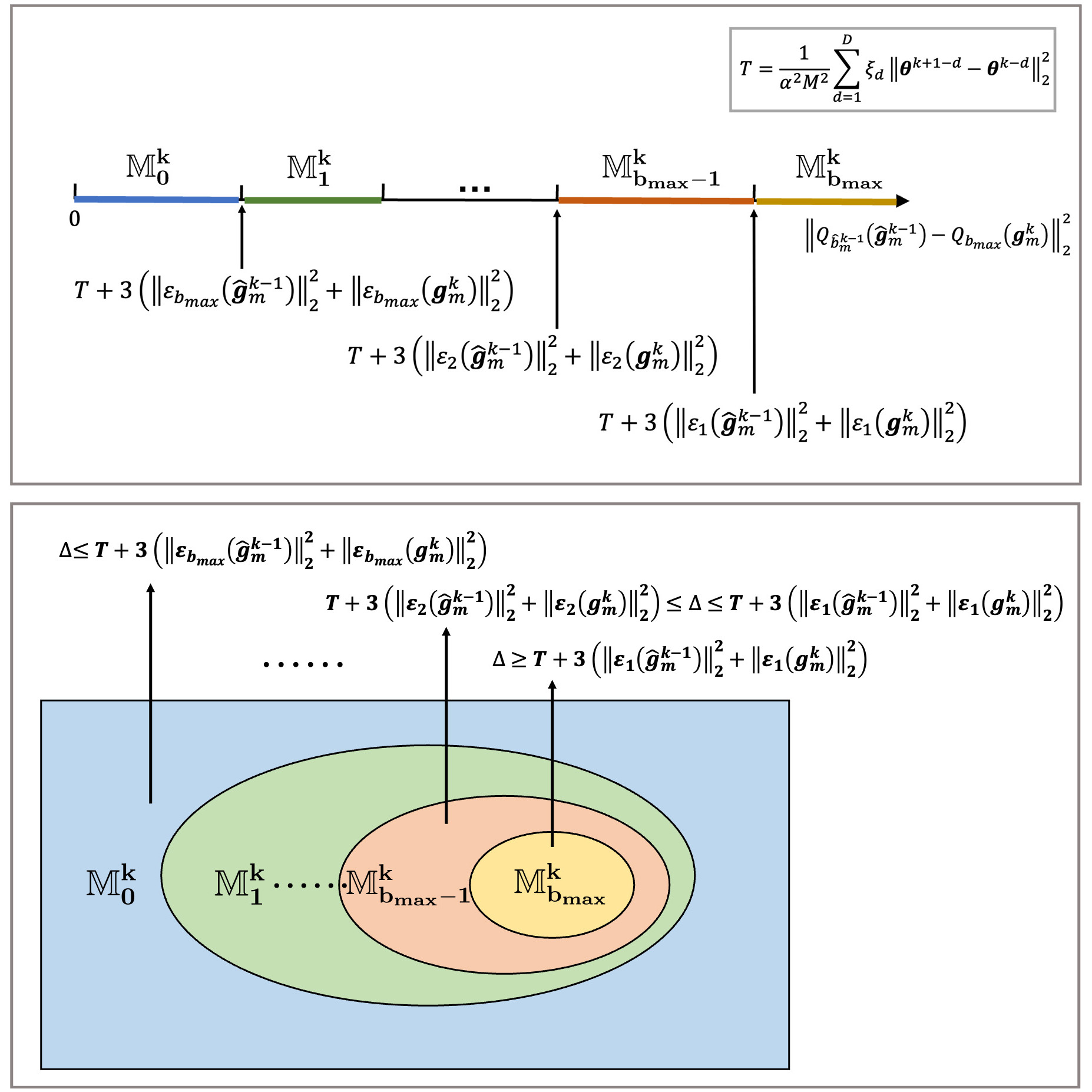

Zihao Zhao, Yuzhu Mao, Zhenpeng Shi, Yang Liu, Tian Lan, Wenbo Ding, Xiao-Ping Zhang. IEEE Transactions on Mobile Computing (TMC), 2023. We formulate an optimization problem to minimize the impact of skipped client updates, then derive an optimal quantization precision strategy, demonstrating comparable model performance with a 60.4% reduction in communication cost in both IID and non-IID scenarios. |

|

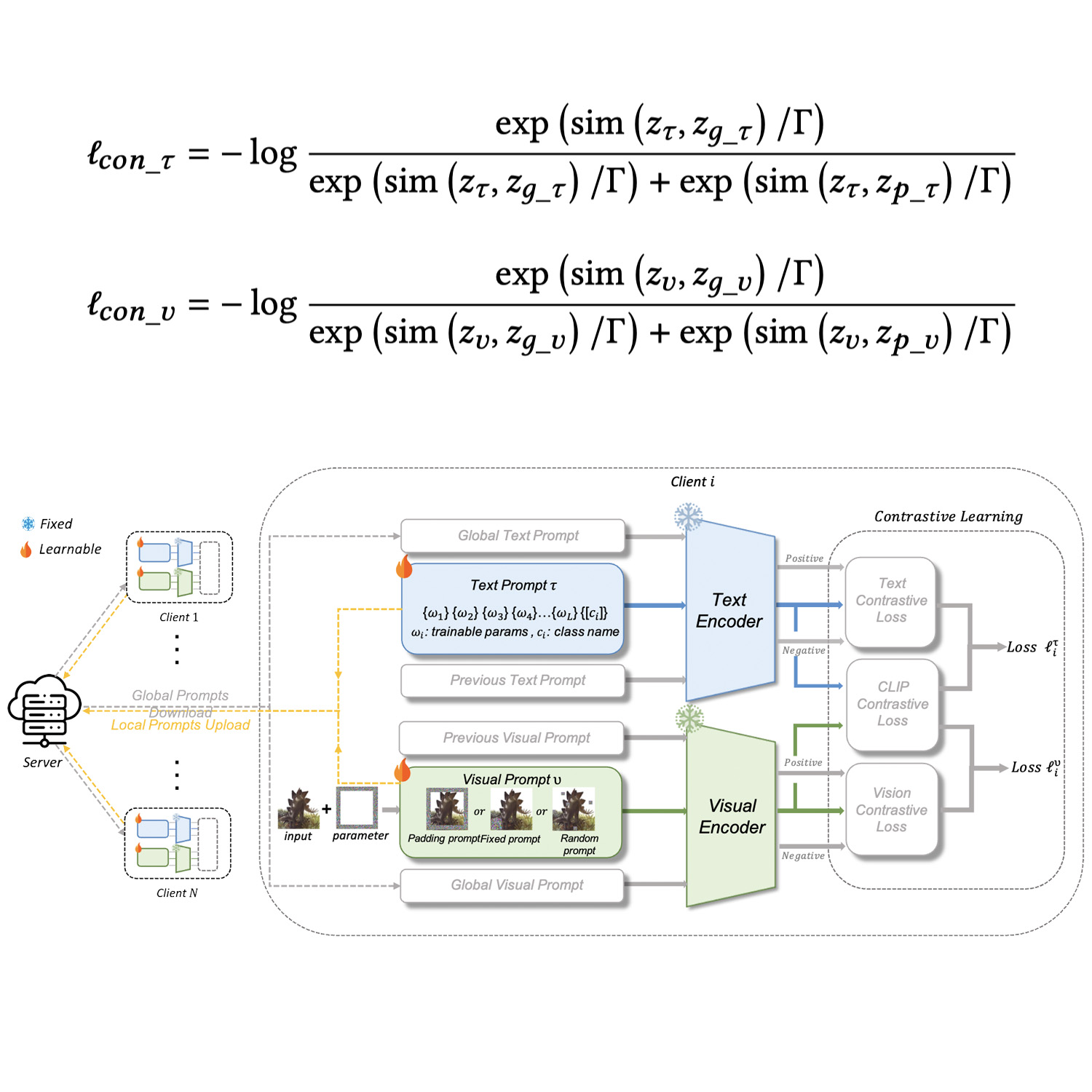

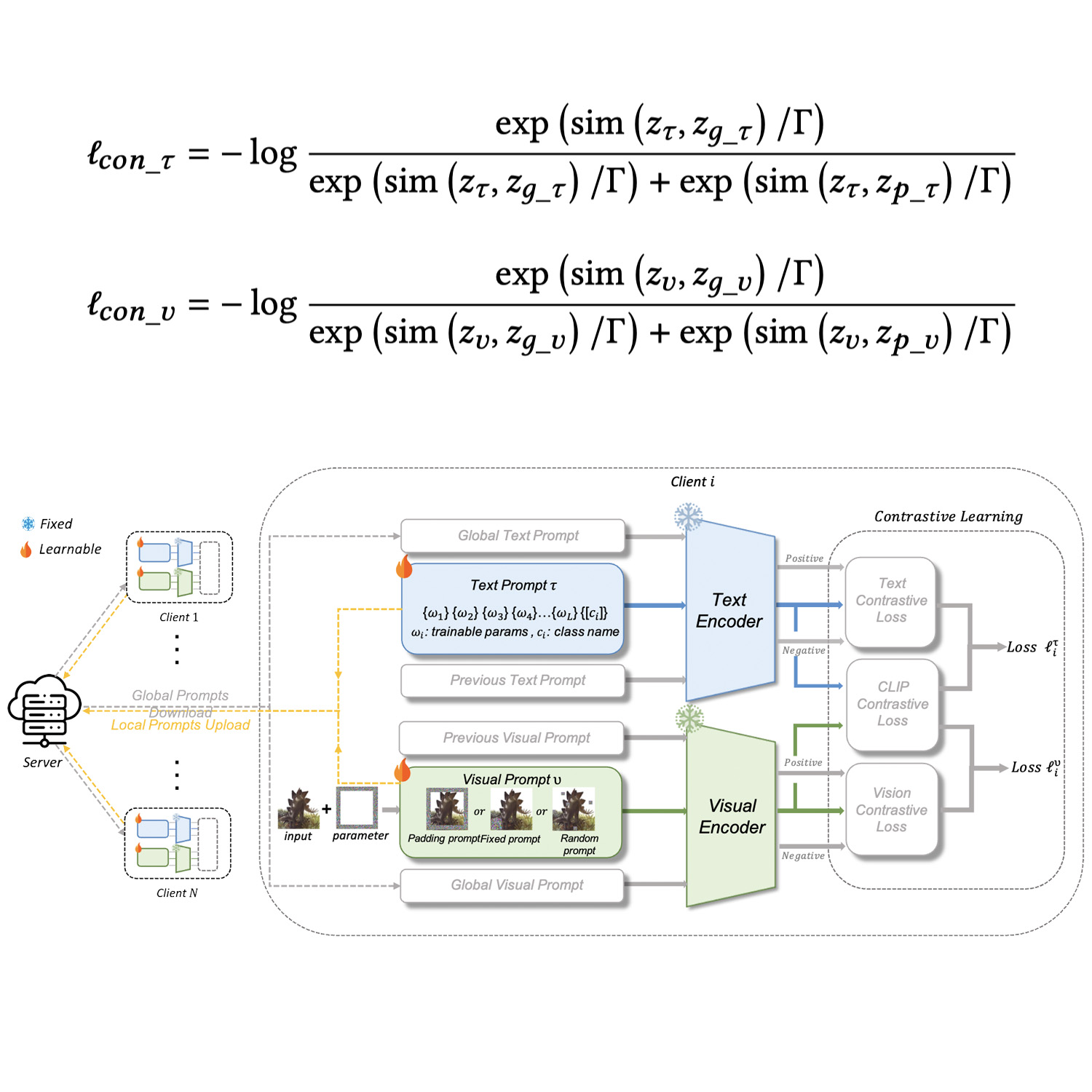

Zihao Zhao, Zhenpeng Shi, Yang Liu, Wenbo Ding. Proceedings of the 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing & the 2023 ACM International Symposium on Wearable Computing (UbiComp-CPD), 2023. Oral presentation, Best Paper Runner-Up Award 🏆. We develop a twin prompt tuning algorithm that integrates visual and textual modalities to enhance data representation, achieving superior performance over all baseline models on 7 datasets. |

|

Zihao Zhao, Mengen Luo, Wenbo Ding. Conference on Parsimony and Learning (CPAL), 2024. Oral presentation. Also presented at the IEEE East Asian School of Information Theory (EASIT), 2022. We introduce a model-based attack that recovers users' private data using a novel matrix Frobenius norm loss function, achieving 92% recovery accuracy, 32% higher than gradient-based attacks. |

|

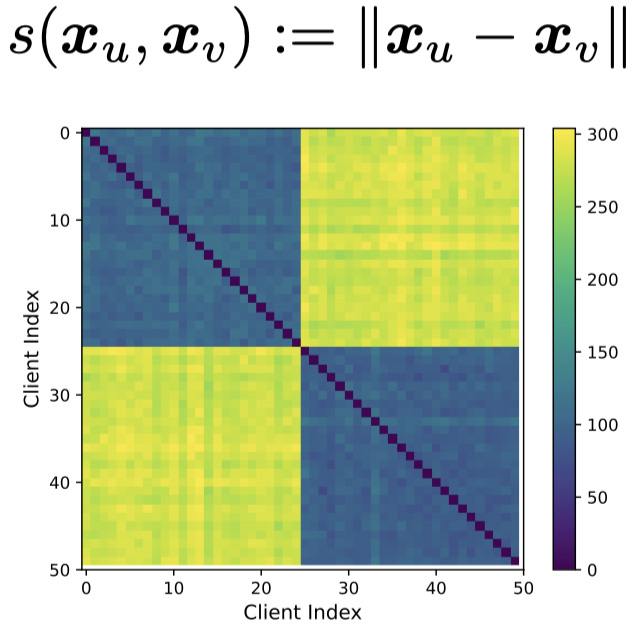

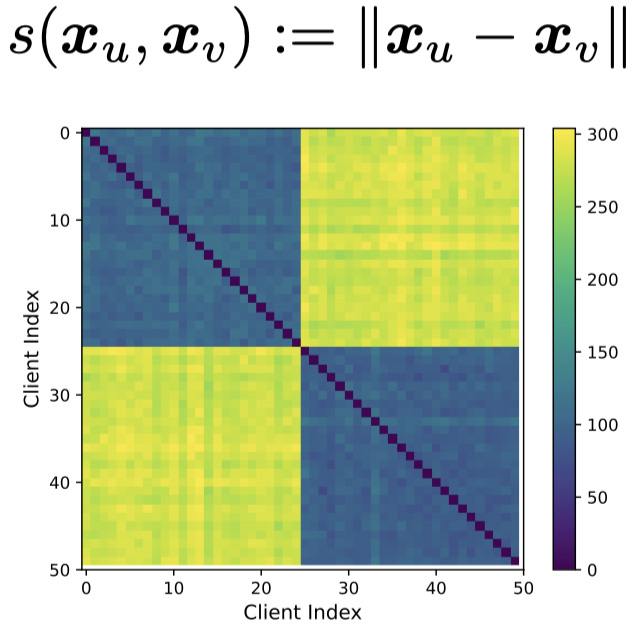

Yuzhu Mao*, Zihao Zhao*, Meilin Yang, Le Liang, Yang Liu, Wenbo Ding, Tian Lan, Xiao-Ping Zhang. IEEE Transactions on Mobile Computing (TMC), 2023. We develop a sparsity-enabled framework that employs a client similarity matrix to address unreliable communications, ensuring federated learning convergence even with 60% weight pruning and 80% client update loss. |

|

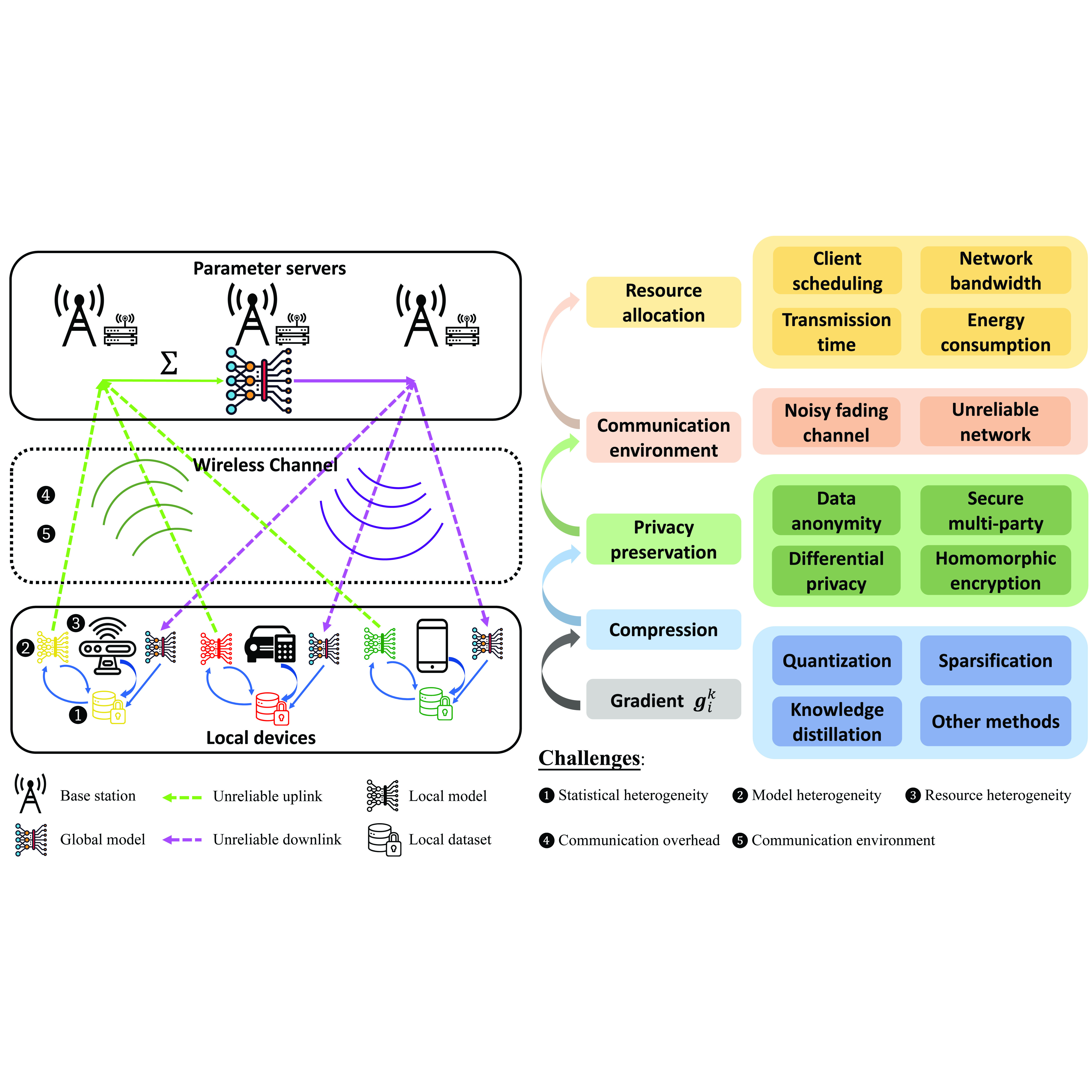

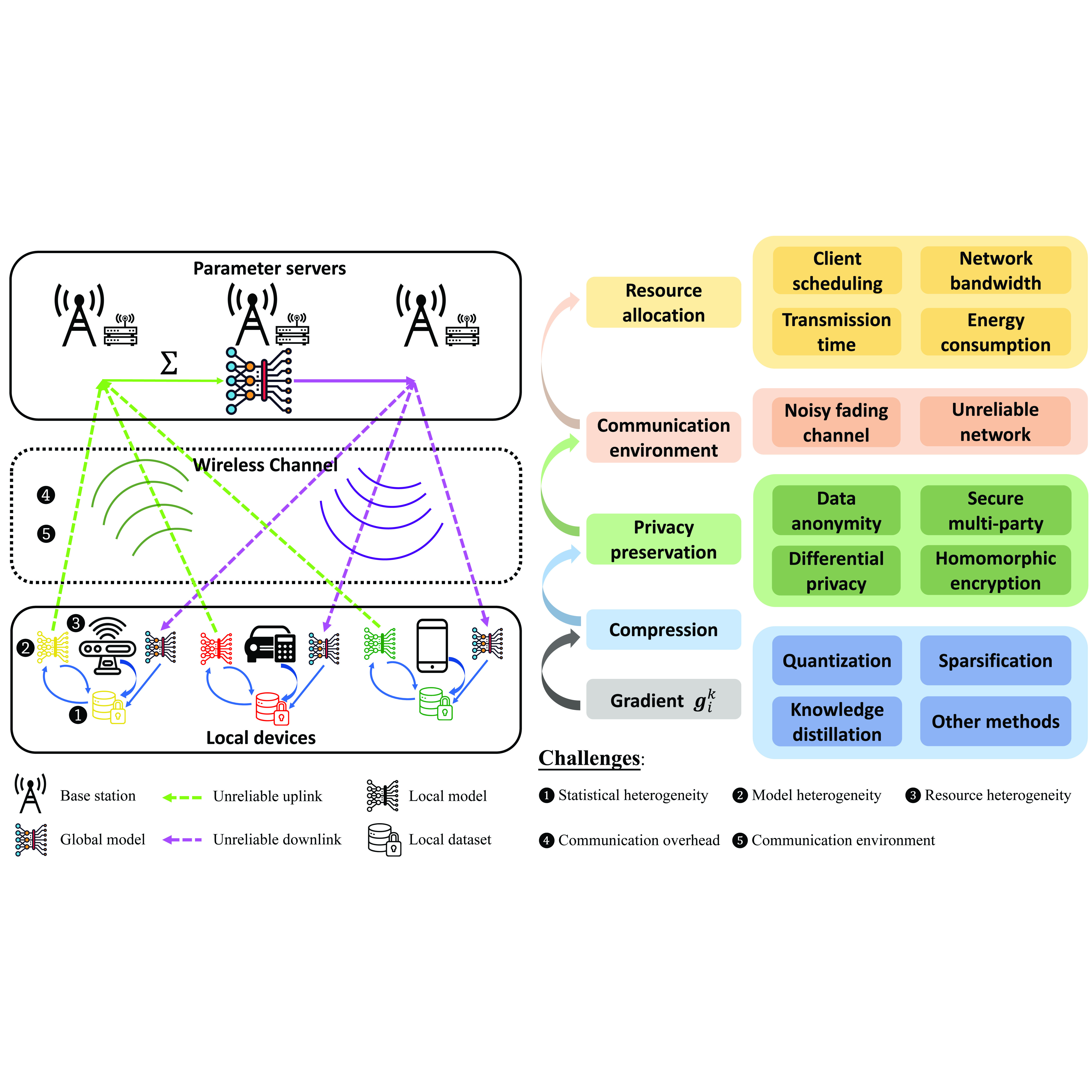

Zihao Zhao, Yuzhu Mao, Yang Liu, Linqi Song, Ye Ouyang, Xinlei Chen, Wenbo Ding. Journal of the Franklin Institute, 2023. We comprehensively review the challenges and advances in communication for federated learning, highlight improvements in communication efficiency, environment, and resource allocation, and outline future research directions for communication-efficient FL algorithms. |

|

Yuzhu Mao, Zihao Zhao, Guangfeng Yan, Yang Liu, Tian Lan, Linqi Song, Wenbo Ding. ACM Transactions on Intelligent Systems and Technology (TIST), 2022. We select the optimal quantization precision by brute-force search, achieve a 25%-50% decrease in transmission compared to existing methods, and demonstrate resilience to client dropout rates of up to 90%. |

|

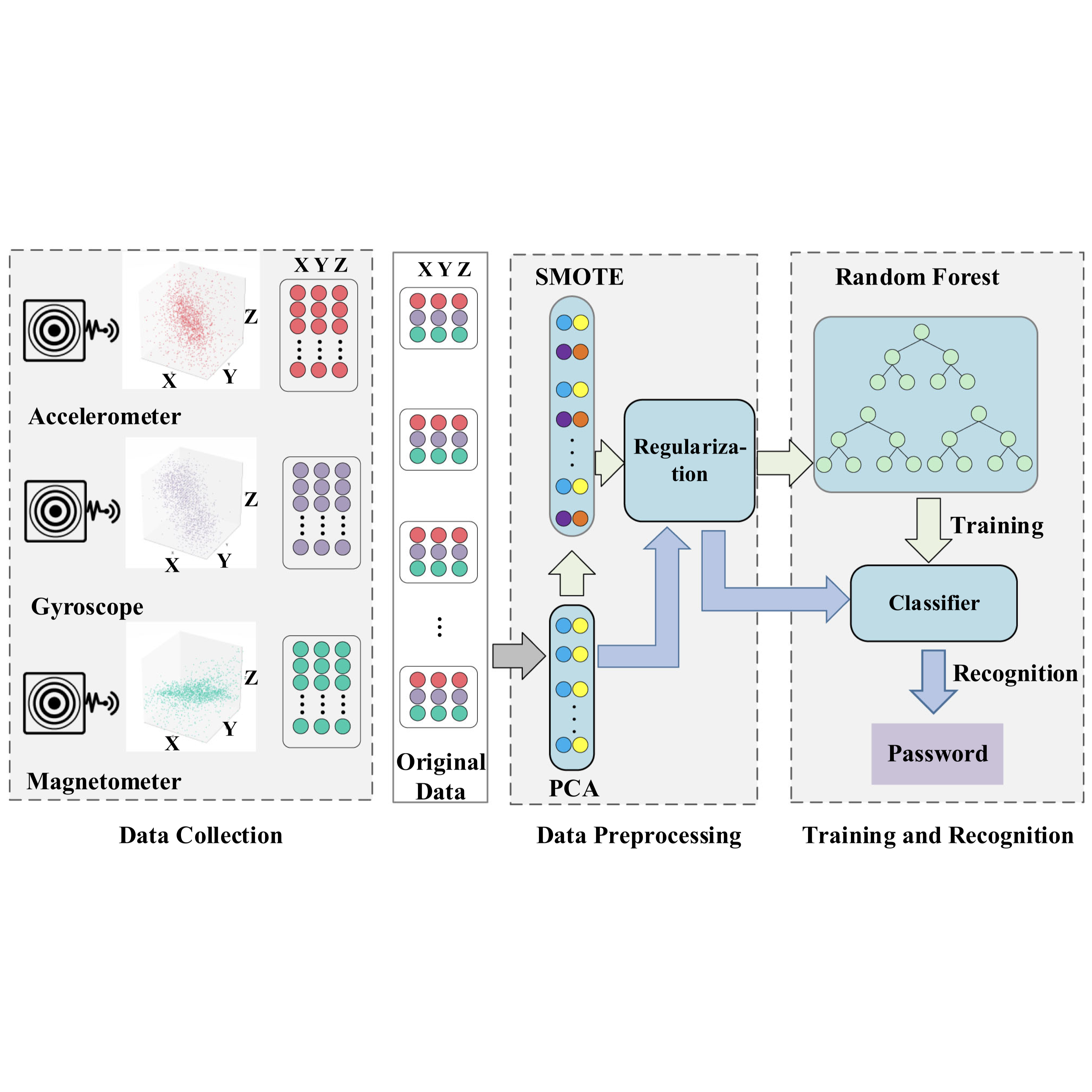

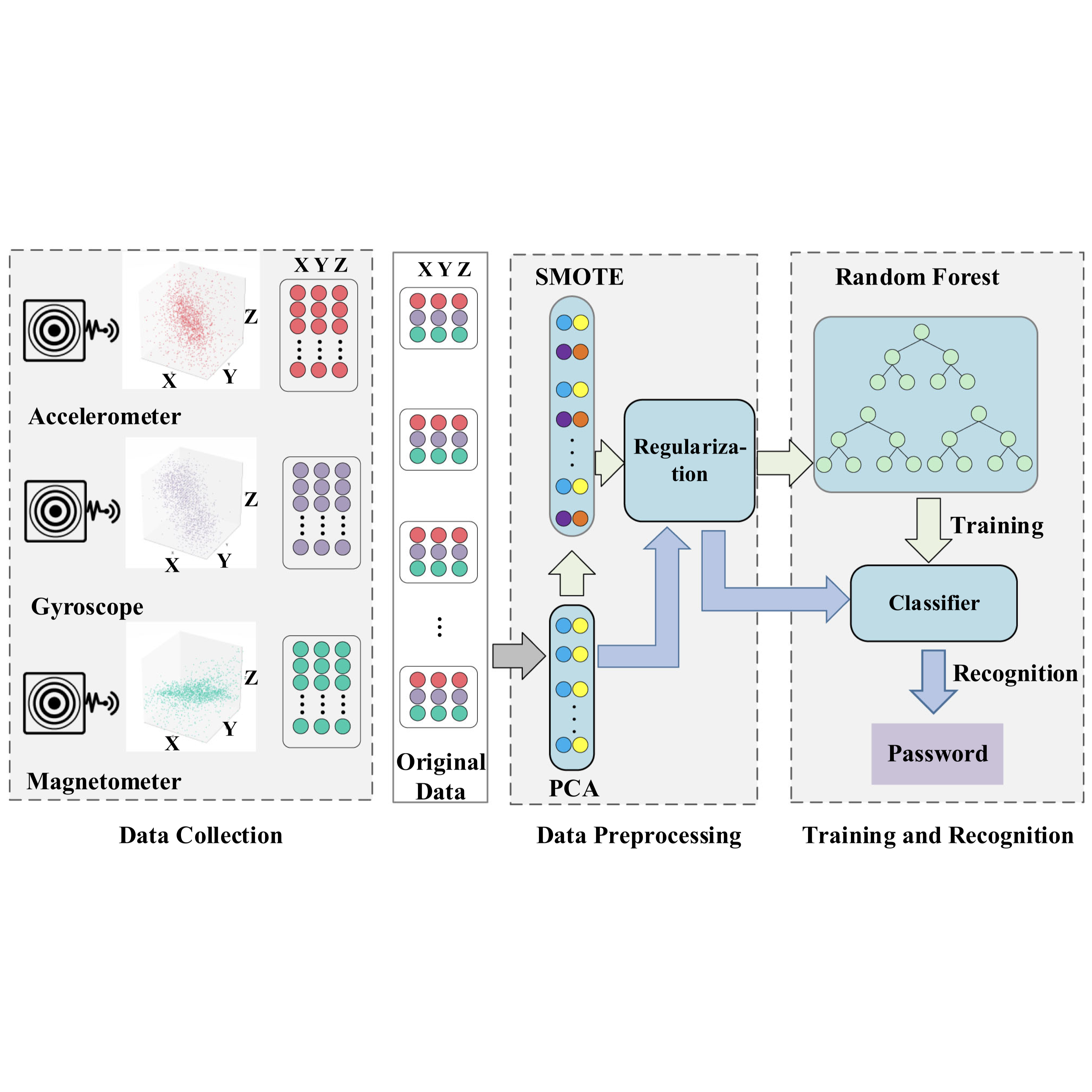

Dajiang Chen*, Zihao Zhao*, Xue Qin, Yaohua Luo, Mingsheng Cao, Hua Xu, Anfeng Liu. IEEE Transactions on Industrial Informatics (TII), 2020. We develop a side-channel-based password recognition system that uses three types of smartphone sensors for password detection, surpassing previous methods with up to 98% accuracy on limited training data. |

|

|

|

Aug. 2024 - Present, Georgia Institute of Technology, PhD, Computer Science |

|

Aug. 2021 - June 2024, Tsinghua University, MS, Data Science and Information Technology |

|

Aug. 2017 - June 2021, University of Electronic Science and Technology of China, BE, Internet Security, GPA: 3.93/4.0 |

|

Tsinghua University Graduate School Comprehensive Scholarship * 2 (2021-2022, 2022-2023, First Prize, Top 3%); Outstanding Graduates of Sichuan Province (2021, Top 5%); Outstanding Students Scholarship, Gold Award, UESTC (2021, Top 3%); UESTC First-class Scholarship * 3 (2017-2018, 2018-2019, 2019-2020, Top 10%) |

|

Last updated: 2026/01. |